Machine Learning with Firebase

Machine learning has undeniably been in the spotlight in recent years, with major companies like Google taking advantage of it, or even claiming that it is at the center of their products.

The amount of quality data gathered, as well as the domains it covers, will determine which companies of today will dominate the world in the next decade. This requires them not only to ship products with ML but also to provide tools that third-party developers can adopt.

Even though regulations forbid them from using personally identifiable data gathered by developers, companies will benefit from the massive amounts of anonymous statistics that only worldwide usage can provide, which will be used to improve their learning algorithms and products in the long run.

MLKit

Announced at Google I/O 2018 as part of Firebase, MLKit is one of those tools that aim to democratize access to ML by letting developers process data on the device or in the cloud through a simple set of APIs. These include:

It is possible to add a custom image interpreter or shape detector for more fine-grained control, but this comes at a considerable cost: it requires a lot more work to implement, and there is less support and documentation to fall back on.

Project

Creating a custom model on Firebase and using MLKit in the mobile apps for detection appeared to be a good alternative for those who want a specific solution without a big implementation cost.

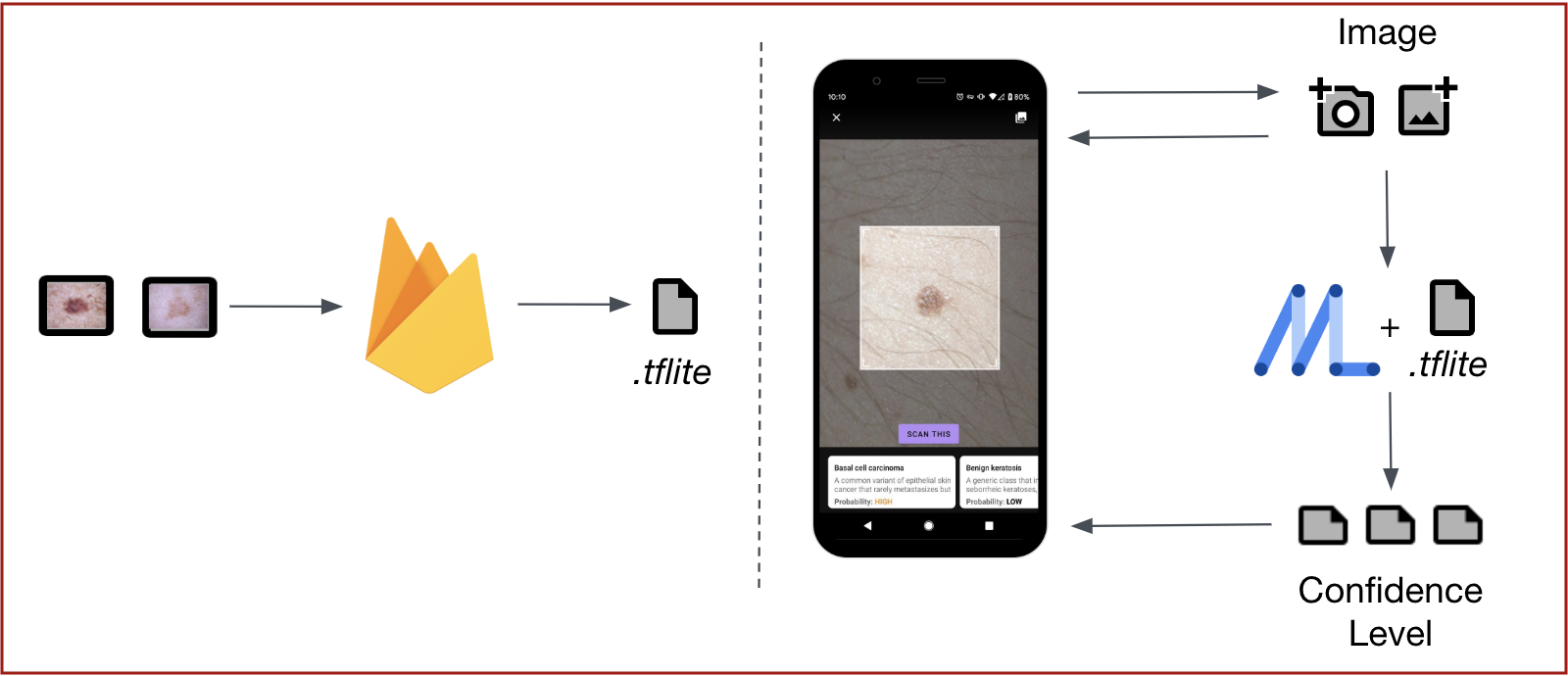

In a team of three, as part of a hackathon, we created a prototype that takes a picture of a skin mole and uses MLKit to detect signs of skin diseases. We first downloaded a public dataset of pictures of skin moles, labeled with their corresponding diseases, and then uploaded them to Firebase, which trains a model and generates a .tflite file.
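The upload step can be prepared with a few lines of scripting. The sketch below is not the code used in the prototype. It assumes that the training images are uploaded as a zip archive with one folder per label, and the function name `build_training_archive` and the file names in the usage note are hypothetical.

```python
import zipfile
from pathlib import Path


def build_training_archive(labeled_images, archive_path):
    """Pack images into a zip with one top-level folder per label.

    labeled_images maps a label name to a list of image file paths.
    Returns the number of images written to the archive.
    (Assumed layout: <label>/<file name>; check the current Firebase docs.)
    """
    count = 0
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for label, paths in sorted(labeled_images.items()):
            for path in paths:
                path = Path(path)
                # Store each file as <label>/<file name> inside the archive.
                archive.write(path, arcname=f"{label}/{path.name}")
                count += 1
    return count
```

Calling `build_training_archive({"Melanoma": ["img_001.jpg"]}, "training_data.zip")` would produce an archive containing `Melanoma/img_001.jpg`, provided that file exists on disk.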

Next, the mobile application obtains an image, either from the gallery or by taking a picture, and processes it with MLKit against the provided model. The result is a confidence level for each provided label, for example:

- Melanoma - 95%

- Benign Keratosis - 10%

- Dermatofibroma - 5%
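Once the confidence levels are available, the app still has to decide what to show. The sketch below is a minimal, library-independent illustration of that post-processing step and does not call the MLKit API. The function name `rank_labels` and the 0.5 threshold are assumptions made for the example.

```python
def rank_labels(labels, confidences, threshold=0.5):
    """Pair each label with its confidence and sort from most to least likely.

    Returns (ranked, confident): the full ranking, and the subset whose
    confidence is at or above the (hypothetical) threshold.
    """
    if len(labels) != len(confidences):
        raise ValueError("expected one confidence per label")
    ranked = sorted(zip(labels, confidences), key=lambda pair: pair[1], reverse=True)
    confident = [(label, score) for label, score in ranked if score >= threshold]
    return ranked, confident


# Values taken from the example above, expressed as fractions.
labels = ["Benign Keratosis", "Melanoma", "Dermatofibroma"]
confidences = [0.10, 0.95, 0.05]

ranked, confident = rank_labels(labels, confidences)
for label, score in ranked:
    print(f"{label} - {score:.0%}")
```

With these inputs only Melanoma passes the threshold. A real application would need to choose that cut-off carefully, especially in a medical context.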

Thoughts

Developing a custom model for a specific problem (analysing skin moles) proved to be an interesting challenge because the domain was outside the 400 categories provided by MLKit’s image labeling capabilities.

Creating a model on Firebase required us to make a trade-off between the latency and file size of the model on one side and its accuracy on the other. The general plan took an hour and a half to train on around 6322 pictures and generated a 3.2 MB .tflite model file. As a reference, and according to the estimates, training a model with the Higher Accuracy plan would take around 6 hours.
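To put those training times in perspective, here is a back-of-the-envelope calculation using only the figures quoted above. It is a rough comparison, not a benchmark: the 6-hour figure is an estimate, and training time does not necessarily scale linearly with the number of images.

```python
images = 6322
general_minutes = 90            # "an hour and a half"
higher_accuracy_minutes = 360   # "around 6 hours" (an estimate, not measured)

general_rate = images / general_minutes
higher_rate = images / higher_accuracy_minutes
slowdown = higher_accuracy_minutes / general_minutes

print(f"general plan: {general_rate:.1f} images/minute")         # 70.2
print(f"Higher Accuracy plan: {higher_rate:.1f} images/minute")  # 17.6
print(f"slowdown: {slowdown:.0f}x")                              # 4x
```

In other words, the Higher Accuracy plan would have cost roughly four times the training time for the same dataset, which matters in a time-boxed hackathon.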

Even though creating, integrating, and using a custom model in an Android app took no longer than half a day on a first try, a custom shape detector that lets the app focus solely on the moles and ignore the rest of the picture would be a much bigger challenge due to the very limited existing documentation.

A colleague of mine managed to get it working by following a (long) tutorial that promised to “create a mobile object detector in 30 minutes” but took almost 3 days to complete due to incorrect shell commands, TPUs not running as expected, and more.

Having explored only the image labeling capabilities of MLKit, the library seems powerful and relatively easy to use for general purposes or proofs of concept, but be prepared for considerably more work if you need to develop a highly customized or data-critical application.

Finally, if you plan on using cloud TPUs (tensor processing units) on a trial plan, make sure you turn them off after use to avoid unnecessary and costly surprises.

Q&A

Attila Blénesi (@ablenessy)

Android Developer at Babylon Health

1. How do you see the emergence of AI in the software industry?

AI has been a topic for decades; the accessibility of compute and data in recent decades has enabled it to boom. In my opinion, the democratisation of AI tools and resources that is currently under way will dominate the next decade.

2. Tell us about any experience you had with machine learning.

I have been working with on-device machine learning on Android for the past few years, focusing on the integration of off-the-shelf (Vision & object detection) and custom (GAN - Pix to pix) models. I went through a lot of struggle and sweat in the early days, when TensorFlow Lite was announced, but now the developer experience is much more streamlined. In the past year alone, there have been advances that enable more and more devs to implement features with ease.

3. Would you consider MLKit as a core library of a project?

Definitely yes. If there is a user need that its offerings (vision, language, and custom AI) can solve, it cuts down the development process significantly. It enables developers to focus on crafting the user experience, handing over model deployment and inference execution to MLKit, which provides easy-to-use APIs backed by state-of-the-art solutions and best practices in the background.

Resources

- ML Kit by Firebase

- ML Kit Showcase with Material Design by Firebase

- “Create a mobile shape detector in 30 minutes” by TensorFlow